Error

Error is just as important a condition of life as truth

In the 90s, Korean Air had an extremely high number of plane crashes compared to other airlines of the time. You might think its planes were old or its pilots poorly trained. No. The problem was a long-standing hierarchical culture in which you are required to be polite and deferential to your elders and superiors. This is what is known as the ethnic theory of plane crashes.

On August 6, 1997, Korean Air Flight 801 was approaching Guam International Airport through rain and storm clouds. The captain chose to land using a “visual approach”, which means the runway must be visible to the crew in order to carry out the landing. This was the conversation recorded in the cockpit:

First officer: Do you think it rains more in this area?

Captain: (silence)

Flight engineer: Captain, the weather radar has helped us a lot.

Captain: Yes. They are very useful

In this brief exchange, the first officer was trying to warn the captain that a visual approach was not safe without a backup plan. The flight engineer was trying to tell his captain the same thing, but their culture of deference to authority, and their fear of upsetting a superior, kept them from confronting him directly. That silence contributed to a disaster that killed 228 of the 254 people on board.

Boeing and Airbus build modern, complex planes designed to be flown by pilots who treat each other as equals. This works perfectly well in “low-power-distance” cultures like the American one, but in “high-power-distance” cultures, where a copilot does not dare correct his captain’s error, it becomes a problem.

This story appears in Malcolm Gladwell’s FANTASTIC book “Outliers: The Story of Success”. Today I’d like to talk about interface design in safety-critical systems, that is, systems in which a mistake is critical: the kind you find in a nuclear power plant, a hospital operating room, or a cockpit. In these systems, an error can mean the difference between life and death.

The Human Factor

Our language is an interface. We use it to communicate with other machines like ourselves, that is, with other humans. Most of the time this interface serves us well, but there are complex systems in which our language operates under life-or-death conditions. In our example, the system (the crew) sends an error message, and the person responsible for avoiding the catastrophe does not perceive it as one.

Most plane crashes happen during takeoff or landing. In most cases, a crash cannot be attributed to a single cause; it is usually the result of a combination of small, incremental mistakes, and many of those mistakes are human. Even the best-trained pilots get bored when doing routine tasks, and in emergencies, stress can make them panic and make bad decisions.

Automated systems, such as computers, are extremely good at repetitive tasks, but they do not react well when an unforeseen situation arises and corrective measures are needed. We humans are the opposite: we are bad at repetitive tasks but good at analyzing unforeseen situations and taking corrective action. That is why we are essential components of safety-critical systems.

Even though we are essential components, we are the weakest links in the chain. In safety-critical systems, hardware components are considered safe if their failure probability is on the order of 10⁻⁶ or lower. The best a single human operator can achieve, even in ideal conditions, is an error probability of about 10⁻⁴, a hundred times higher. The following table shows the “General Human-Error Probability Data in Various Operating Conditions”, taken from the book A Guide to Practical Human Reliability Assessment:

| Description | Error Probability |
|---|---|
| General rate for errors involving high stress levels | 0.3 |
| Operator fails to act correctly in the first 30 minutes of an emergency situation | 0.1 |
| Operator fails to act correctly after the first few hours in a high-stress situation | 0.03 |
| Error in a routine operation where care is required | 0.01 |
| Error in simple routine operation | 0.001 |
| Selection of the wrong switch (dissimilar in shape) | 0.001 |
| Human-performance limit: single operator | 0.0001 |
| Human-performance limit: team of operators performing a well-designed task | 0.00001 |

The main causes of operators’ mistakes are stress and repetitive, routine actions (DOC). But good interface design can help operators intervene correctly, and that can save hundreds of lives.
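Why is routine work so dangerous when each individual slip is unlikely? A small worked example in Python makes the arithmetic concrete. It assumes, purely for illustration, that every operation fails independently with the same probability; real human errors are neither independent nor constant, so treat the numbers as a rough intuition, not a reliability assessment. The per-operation rates come from the table above and the 10⁻⁶ hardware figure.

```python
# Worked example: how small per-operation error rates accumulate.
# ASSUMPTION (illustrative only): every operation fails independently with
# the same probability p; real human errors are neither independent nor constant.


def prob_at_least_one_error(p: float, n: int) -> float:
    """Probability of at least one error in n independent operations."""
    return 1.0 - (1.0 - p) ** n


# Per-operation probabilities: the hardware order of magnitude from the text,
# and two rows of the human-error table.
RATES = {
    "hardware (1e-6)": 1e-6,
    "simple routine op": 0.001,
    "routine op, care needed": 0.01,
}

for label, p in RATES.items():
    for n in (1, 100, 1000):
        print(f"{label:24} n={n:<5} P(>=1 error) = {prob_at_least_one_error(p, n):.4f}")
```

Under these assumptions, a 0.001 chance of error per simple routine operation becomes roughly a 63% chance of at least one error over 1,000 operations, while a hardware component at 10⁻⁶ stays around 0.1%.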

Usability vs. Safety

In safety-critical systems, the interface’s main objective is safety: protecting users against errors. Usability is a complementary objective, not the main one. Better usability reduces operators’ anxiety and makes them feel more comfortable, but there has to be a trade-off between the features that make the interface safer and those that make it more usable.

Imagine a system that lets you carry out an important operation simply by pressing the “Enter” key a certain number of times. That would be a very usable system, but in a safety-critical system it also lets operators skip the steps in which important safety checks are carried out. Such a system existed: the Therac-25. It would not be worth mentioning, except that the Therac-25 was a radiation therapy machine, and its operators ended up giving patients massive overdoses of radiation, some of them lethal. To cite Wikipedia:

These accidents highlighted the dangers of software control of safety-critical systems, and they have become a standard case study in health informatics and software engineering.

Wikipedia
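The lesson can be sketched as a software interlock. The Python below is a hypothetical illustration, not the Therac-25’s actual software (whose documented failures included race conditions in its control code): the names `TreatmentConsole`, `edit`, `run_safety_checks`, `treat` and the dose limit are invented for this example. The idea is that the treatment command is accepted only if every safety check has passed against the exact settings currently entered, so pressing a key again cannot skip a check or reuse a stale one.

```python
# HYPOTHETICAL safety-interlock sketch; this is NOT the Therac-25's software.
# A treatment is accepted only if all safety checks passed on exactly the
# settings currently entered, so extra keypresses cannot skip or reuse checks.
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Settings = Dict[str, float]
Check = Callable[[Settings], bool]


@dataclass
class TreatmentConsole:
    checks: List[Check]
    settings: Settings = field(default_factory=dict)
    verified: Optional[Settings] = None  # snapshot the checks passed on

    def edit(self, key: str, value: float) -> None:
        self.settings[key] = value
        self.verified = None  # any edit invalidates earlier checks

    def run_safety_checks(self) -> bool:
        if all(check(self.settings) for check in self.checks):
            self.verified = dict(self.settings)  # copy, not a live reference
            return True
        self.verified = None
        return False

    def treat(self) -> str:
        # Refuse unless the checks passed on these exact settings.
        if self.verified is None or self.verified != self.settings:
            return "REFUSED: run safety checks on the current settings"
        self.verified = None  # every treatment needs a fresh verification
        return "TREATING"


# Hypothetical check with an invented dose limit (illustrative units).
MAX_DOSE = 200.0
console = TreatmentConsole(checks=[lambda s: 0 < s.get("dose", 0) <= MAX_DOSE])
console.edit("dose", 180.0)
print(console.treat())              # prints the REFUSED message: no checks yet
print(console.run_safety_checks())  # True
console.edit("dose", 25000.0)       # editing after verification...
print(console.treat())              # ...prints the REFUSED message again
```

The design choice is to compare against a snapshot of the verified settings rather than a simple “checked” flag: a flag would survive later edits, which is exactly the kind of shortcut that lets a fast operator bypass a safety check.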

Non-expert Users

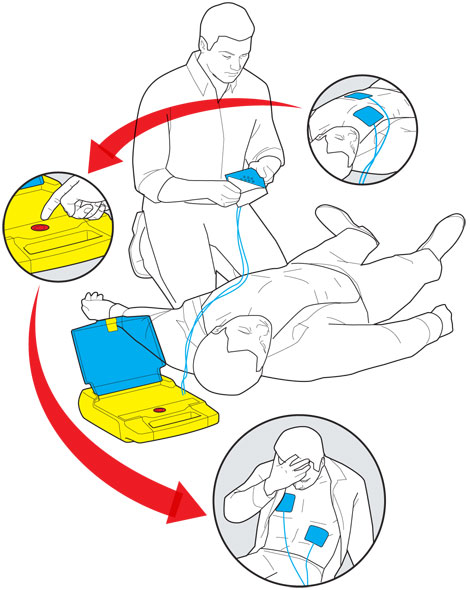

In aviation and in hospitals, the interfaces of these critical systems are designed for expert users. But what happens when non-experts are forced to use this kind of system? Can’t think of an example? How about the portable defibrillators that are becoming so common in offices and public places?

In these cases, simplifying the interface is extremely important, and that simplicity has to be embodied in a simple, effective design. Tom Kelley of IDEO described how one of the first versions of the portable defibrillator looked like a brick and opened like a laptop, but users could not tell how to open it:

“We’re in a situation where seconds make the difference between life and death. If it takes an extra 10 seconds to open the latch, that’s a terrible design.”

Tom Kelley

Conventional defibrillators are operated by trained medical professionals, such as cardiologists. Even more importantly, these professionals determine whether the victim is in cardiac arrest before administering an electric shock. Both the operation and this verification had to be built into an interface that non-experts could use properly. The creators of the portable defibrillator set out some basic premises for the device’s design:

“The device must communicate immediately what it is, exactly what to do, and help users do it quickly, confidently, and accurately in socially and emotionally charged circumstances”

And so the device went from a puzzling brick to a simple 1-2-3 process.

Another design decision followed from the chaotic situation operators find themselves in: their judgment could not be trusted to determine whether the victim was in cardiac arrest. So the device reads the heart’s rhythm through the electrodes placed on the victim and delivers the electric shock only if its analysis indicates a cardiac arrest that a shock can treat. The latest designs also include spoken instructions that tell the operator what to do. A curious fact: the voice chosen was that of Peter Thomas, the narrator of the PBS series NOVA, who is known for his calm voice.
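The key decision, taking the shock/no-shock judgment away from the operator, can be sketched as a guided flow. This is a hypothetical Python illustration, not any manufacturer’s firmware: the `Rhythm` enum, the `aed_cycle` function and the spoken prompts are invented, and the `analyze` callback stands in for the validated ECG-analysis algorithms real devices use. Real automated defibrillators look for rhythms a shock can correct, such as ventricular fibrillation, so the sketch uses a “shockable” label rather than a general diagnosis.

```python
# HYPOTHETICAL sketch of an automated defibrillator's guided flow; not real
# firmware. The rhythm analysis is a stub passed in by the caller.
from enum import Enum
from typing import Callable, List


class Rhythm(Enum):
    SHOCKABLE = "shockable"          # e.g. ventricular fibrillation
    NON_SHOCKABLE = "non-shockable"  # e.g. a normal rhythm


def aed_cycle(pads_attached: bool,
              analyze: Callable[[], Rhythm],
              say: Callable[[str], None]) -> bool:
    """Run one guided cycle; return True only if a shock is advised."""
    # Guide the operator: nothing happens until the pads are on.
    if not pads_attached:
        say("Attach the pads to the patient's bare chest.")
        return False
    say("Analyzing heart rhythm. Do not touch the patient.")
    # The shock decision comes from the device's analysis, never the operator.
    if analyze() is not Rhythm.SHOCKABLE:
        say("No shock advised. Begin CPR.")
        return False
    say("Shock advised. Stand clear and press the shock button.")
    return True


# Usage with stubbed analyses (a real device reads the ECG from the pads).
prompts: List[str] = []
print(aed_cycle(True, lambda: Rhythm.NON_SHOCKABLE, prompts.append))  # False
print(aed_cycle(True, lambda: Rhythm.SHOCKABLE, prompts.append))      # True
```

Because the operator only places the pads and presses the button when told to, every path to a shock runs through the device’s own analysis.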

Safety-critical systems are an important field of study in HCI. Even more importantly, what we learn from them will help reduce human error in non-critical systems too.

Follow @NoamMorrissey